One of the biggest challenges companies are facing right now with AI systems is how to deploy them securely, especially when they’re feeding in sensitive data that they don’t want leaked to the wrong people through an LLM.

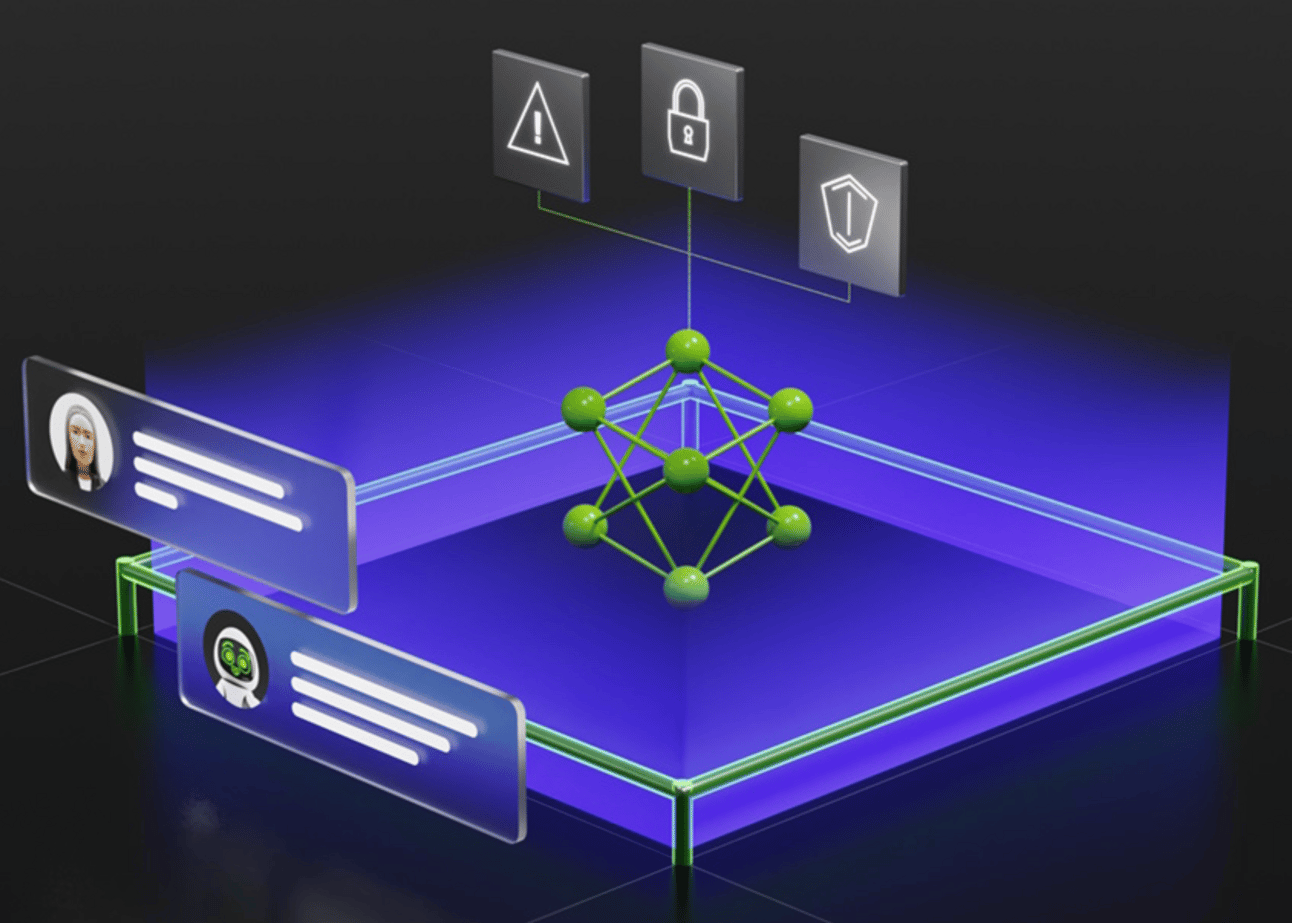

NVIDIA has a cool project called NeMo Guardrails that attempts to address this.

It’s an open-source framework for placing guardrails around an LLM so that it won’t answer certain types of questions.
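Structurally, “placing guardrails around an LLM” means wrapping the model call with a check on the incoming question (an input rail) and a check on the outgoing answer (an output rail). Here’s a minimal sketch of that shape in plain Python. It is not the NeMo Guardrails API, and the term list, refusal text, and `fake_llm` stand-in are all hypothetical.

```python
from typing import Callable

# Hypothetical phrases this imaginary company never wants discussed or revealed.
SENSITIVE_TERMS = ("operating income", "salary", "layoff")

REFUSAL = "Sorry, I can't help with that. Please reach out to HR."


def mentions_sensitive(text: str) -> bool:
    """True if any sensitive term appears in the text (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in SENSITIVE_TERMS)


def with_guardrails(llm: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a model call with an input check and an output check."""

    def guarded(question: str) -> str:
        # Input rail: refuse before the model ever sees the question.
        if mentions_sensitive(question):
            return REFUSAL
        answer = llm(question)
        # Output rail: suppress answers that leak sensitive content anyway.
        if mentions_sensitive(answer):
            return REFUSAL
        return answer

    return guarded


def fake_llm(question: str) -> str:
    # Stand-in for a real model so the sketch runs without an API key.
    if "profit" in question.lower():
        return "Our operating income this year was <figure>."
    return f"Here is a helpful answer to: {question}"


bot = with_guardrails(fake_llm)
print(bot("What is the company's operating income for this year?"))  # blocked by the input rail
print(bot("How did we do on profit?"))  # blocked by the output rail
print(bot("How do I book a meeting room?"))  # passes through untouched
```

The output rail matters because a harmless-looking question can still pull a sensitive answer out of the model, which is exactly the leak the input check alone would miss.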

Adding Guardrails to your project

I saw a demo of the tool at the FullyConnected conference in San Francisco (which was super fun, by the way), and the project seems quite easy to use.

NVIDIA demoed a business/HR question coming into the system and returning an undesired result. The speaker then added a rule saying not to answer that type of question, and the next time it was asked, the system gave an LLM-manicured answer that redirected the question.

The question was something like:

What is the company’s operating income for this year?

…which, for the purposes of the demo, they didn’t want the system to answer.

After the guardrail was applied, the system basically punted the question to HR, which was pretty cool to see done live in a few seconds.

A Python example
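In NeMo Guardrails itself, a rule like the one from the demo is written in the framework’s Colang language and loaded through classes such as `RailsConfig` and `LLMRails`, with the LLM helping decide what the user is actually asking. The sketch below doesn’t use the library at all. It’s a dependency-free toy of the same idea: a few hypothetical example questions define an off-limits topic, incoming questions are scored against them by simple word overlap (Jaccard similarity, a stand-in for the real framework’s smarter matching), and close matches get an HR redirect instead of a model answer. The topic, examples, threshold, and `echo_llm` stub are all assumptions for illustration.

```python
import re
from typing import Callable, Optional

# Hypothetical example questions for one off-limits topic (NOT NeMo's API).
BLOCKED_TOPICS = {
    "company finances": [
        "What is the company's operating income for this year?",
        "How much revenue did the company make?",
        "What are our profits this quarter?",
    ],
}

HR_REDIRECT = "That's not something I can discuss here. Please contact HR."


def tokens(text: str) -> set[str]:
    """Lowercased words, with punctuation other than apostrophes dropped."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap similarity between two token sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_topic(question: str, threshold: float = 0.5) -> Optional[str]:
    """Return the blocked topic the question most resembles, if close enough."""
    q = tokens(question)
    best_topic, best_score = None, 0.0
    for topic, examples in BLOCKED_TOPICS.items():
        for example in examples:
            score = jaccard(q, tokens(example))
            if score > best_score:
                best_topic, best_score = topic, score
    return best_topic if best_score >= threshold else None


def answer(question: str, llm: Callable[[str], str]) -> str:
    """Redirect off-limits questions to HR; pass everything else to the model."""
    if match_topic(question) is not None:
        return HR_REDIRECT
    return llm(question)


def echo_llm(question: str) -> str:
    # Stand-in for a real model call.
    return f"(model answer to: {question})"


print(answer("What was the company's operating income this year?", echo_llm))
print(answer("How do I reset my password?", echo_llm))
# Same meaning, but few shared words, so it scores low and reaches the model:
print(answer("Roughly how profitable were we in the last twelve months?", echo_llm))
```

The first question overlaps heavily with an example (similarity 0.7) and gets redirected; the second is unrelated and goes to the model. The third shows the weakness in miniature: a reworded version of a blocked question can fall under the threshold and slip through.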

So is this solved, then?

No. This won’t stop people from asking things the company would rather they didn’t, or from getting answers the company would rather they didn’t see.

And it definitely won’t solve prompt injection, which will likely be able to bypass this type of thing fairly easily.

But it does address a significant part of the problem, and if solutions like this can get us 70-90% of the way there, that has extraordinary value.

Great project, NVIDIA. Keep up the good work.
