OPERATING SYSTEMS PART 2

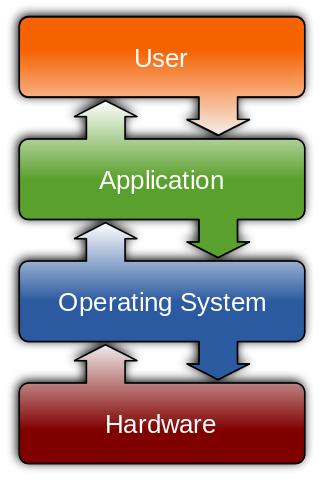

Introduction to Operating Systems is a three (3) credit unit course of twenty-two units. It deals with the various operating system design concepts and techniques, and it gives an insight into the evolution of operating systems. Since the operating system is the most important software in a computer system, this course takes you through the various types of operating system depending on the environment, the design considerations, the functions performed by the operating system, and how these are implemented in hardware or software.

This course is divided into six modules:

- The first module deals with the basic introduction to the concept of operating systems, such as the definition and functions of an operating system and the history and evolution of operating systems.
- The second module treats, extensively, the various types of operating system.
- The third module deals with process management and discusses the concepts of co-operating processes, threads, and CPU scheduling.
- The fourth module discusses process synchronization issues such as race conditions, mutual exclusion, the critical section problem, and other classic problems of synchronization.
- The fifth module treats deadlock issues such as deadlock characterization and methods for dealing with deadlocks.
- The sixth and last module discusses the memory management functions of the operating system, including memory management algorithms such as paging, segmentation, and contiguous memory allocation, together with their peculiar features.

This Course Guide gives you a brief overview of the course contents, course duration, and course materials.

What you will learn in this course

The main purpose of this course is to provide the necessary tools for designing an operating system. It makes available the steps and tools that will enable you to make proper and accurate decisions on design issues and on the necessary algorithms for a particular computing environment. We intend to achieve this through the following:

Course Aims

- Introduce the concepts associated with operating systems;

- Provide the necessary tools for analysing a computing environment and choosing or designing an appropriate operating system.


Course Curriculum

- OS2: Memory Management-Unit 3: Non-Contiguous Allocation FREE 01:00:00

- In the last unit, you learnt about contiguous memory allocation, where external fragmentation was highlighted as a major problem. It was also mentioned that compaction and a non-contiguous logical address space are solutions to external fragmentation. In this unit we will go further and discuss some of the techniques for making the physical address space of a process non-contiguous, such as paging and segmentation.

  2.0 Objectives

  At the end of this unit, you should be able to:
  - Describe paging
  - Describe segmentation
  - Explain the differences between paging and segmentation
  - State the advantages and disadvantages of both paging and segmentation
  - Describe a method for solving the problems of both paging and segmentation
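To make the idea of paging concrete, here is a minimal sketch of how a logical address might be translated to a physical address through a page table. The page size, the page table contents, and the function name `translate` are all assumptions chosen for illustration; they do not come from the course material.

```python
PAGE_SIZE = 4096  # bytes per page (assumed value for illustration)

# Hypothetical page table for one process: page number -> frame number.
# The frames are deliberately scattered to show non-contiguous placement.
page_table = {0: 5, 1: 2, 2: 7}


def translate(logical_address: int) -> int:
    """Translate a logical address to a physical address using paging."""
    # Split the logical address into a page number and an offset in the page.
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    if page_number not in page_table:
        raise LookupError(f"page {page_number} is not mapped")
    # The offset is unchanged; only the page number is replaced by a frame.
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset


if __name__ == "__main__":
    # Logical address 4100 lies in page 1 at offset 4; page 1 maps to frame 2.
    print(translate(4100))  # 2 * 4096 + 4 = 8196
```

Because consecutive pages map to unrelated frames, the process sees one contiguous logical address space while its physical memory is scattered.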

- OS2: Memory Management-Unit 2: Memory Allocation Techniques FREE 00:40:00

- Introduction: As you have learnt from the previous unit, memory management is essential to process execution, which is the primary job of the CPU. The main memory must accommodate both the operating system and the various user processes. You therefore need to allocate different parts of the main memory in the most efficient way possible. In this unit, you will learn about some memory management algorithms such as contiguous memory allocation and its different flavours. The problems that may arise from contiguous memory allocation (fragmentation) will also be discussed in this unit.

  2.0 Objectives

  At the end of this unit, you should be able to:
  - Describe contiguous memory allocation
  - Describe the variants of contiguous memory allocation such as best-fit, worst-fit, and first-fit
  - Distinguish between internal and external fragmentation
  - Describe methods of solving external fragmentation
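The three placement strategies named in the objectives can be compared with a short sketch. It assumes free memory is represented simply as a list of hole sizes in address order; the function name `choose_hole` and the sample sizes are hypothetical.

```python
def choose_hole(holes, request, strategy):
    """Return the index of the free hole chosen for `request`, or None.

    holes:    sizes of the free blocks, listed in address order
    strategy: "first", "best", or "worst"
    """
    # Only holes that are large enough can satisfy the request.
    candidates = [(size, index) for index, size in enumerate(holes)
                  if size >= request]
    if not candidates:
        return None
    if strategy == "first":
        # First-fit: the lowest-addressed hole that is big enough.
        return min(candidates, key=lambda c: c[1])[1]
    if strategy == "best":
        # Best-fit: the smallest hole that is big enough.
        return min(candidates)[1]
    if strategy == "worst":
        # Worst-fit: the largest hole (lowest address wins a tie).
        return max(candidates, key=lambda c: (c[0], -c[1]))[1]
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    holes = [100, 500, 200, 300, 600]  # illustrative sizes, e.g. in KB
    for strategy in ("first", "best", "worst"):
        print(strategy, choose_hole(holes, 212, strategy))
```

For a request of 212 against these holes, first-fit picks the 500 block, best-fit the 300 block, and worst-fit the 600 block. Whatever is left over in the chosen hole stays free, and small leftovers scattered across memory are what accumulate into external fragmentation.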

- OS2: Memory Management-Unit 1: Memory Management Fundamentals Unlimited

- OS2: Deadlocks-Unit 2: Methods for Dealing with Deadlocks Unlimited

- As you have seen in the previous unit, for a deadlock to occur, each of the four necessary conditions must hold. You were also introduced to some of the methods for handling a deadlock situation. In this unit you will be fully exposed to deadlock prevention and deadlock avoidance approaches. As discussed before, deadlock prevention is all about ensuring that at least one of the four necessary conditions cannot hold; we will elaborate further by examining each of the four conditions separately. Deadlock avoidance is an alternative method which takes care of some of the shortcomings of deadlock prevention, such as low device utilization and reduced system throughput. In this unit, you will therefore learn how some of the algorithms for deadlock prevention, deadlock avoidance, and deadlock detection and recovery are implemented.

  2.0 Objectives

  At the end of this unit you should be able to:
  - Describe deadlock prevention
  - Explain what is meant by deadlock avoidance
  - Describe the Banker's algorithm
  - Describe the Resource-Allocation graph algorithm
  - Explain what is meant by a safe state
  - Describe deadlock detection algorithms and how to recover from deadlock
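The notion of a safe state can be illustrated with a sketch of the safety check that sits at the heart of the Banker's algorithm. This is a simplified version under stated assumptions: the function name `find_safe_sequence` is hypothetical, and the resource numbers in the example are illustrative values, not data from the course.

```python
def find_safe_sequence(available, allocation, need):
    """Return a safe order of process indices, or None if the state is unsafe.

    available:  free instances of each resource type
    allocation: allocation[i][j] = instances of type j held by process i
    need:       need[i][j] = instances of type j process i may still request
    """
    work = list(available)
    finished = [False] * len(allocation)
    sequence = []
    progressed = True
    while progressed:
        progressed = False
        for i, (held, still_needed) in enumerate(zip(allocation, need)):
            # A process can run to completion if its remaining need fits
            # within the resources that are currently free.
            if not finished[i] and all(n <= w for n, w in zip(still_needed, work)):
                # When it finishes, it releases everything it holds.
                work = [w + h for w, h in zip(work, held)]
                finished[i] = True
                sequence.append(i)
                progressed = True
    return sequence if all(finished) else None


if __name__ == "__main__":
    available = [3, 3, 2]
    allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
    need = [[7, 4, 3], [1, 2, 2], [6, 0, 0], [0, 1, 1], [4, 3, 1]]
    print(find_safe_sequence(available, allocation, need))
```

A state is safe when at least one such sequence exists. An avoidance scheme would run this check on the state that would result from a request and grant the request only if that state is still safe.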

- OS2: Deadlocks-Unit 1: Deadlock Characterization Unlimited

- In a multiprogramming environment, several processes may compete for a finite number of resources. A process requests resources; if the resources are not available at that time, the process enters a wait state. Waiting processes may never again change state, because the resources they have requested are held by other waiting processes. This situation is called deadlock. We have already mentioned this briefly in module 4 in connection with semaphores. In this module, you will be taken through methods that an operating system can use to prevent or deal with deadlocks.

  Objectives

  At the end of this unit, you should be able to:
  - Define deadlock
  - State the necessary conditions for deadlock to occur
  - Describe the Resource-Allocation graph
  - Explain how it can be used to describe deadlocks
  - Describe some of the methods for handling deadlocks
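The situation described above, where waiting processes hold the resources that other waiting processes need, can be sketched as a cycle search. The example assumes every resource has a single instance, so the resource-allocation graph can be collapsed into a wait-for graph between processes, and a cycle then means deadlock. The function name `has_cycle` and the process names are hypothetical.

```python
def has_cycle(wait_for):
    """Return True if the wait-for graph contains a cycle.

    wait_for maps each process to the processes it is waiting on.
    """
    UNVISITED, IN_PROGRESS, DONE = 0, 1, 2
    state = {}

    def visit(process):
        state[process] = IN_PROGRESS
        for blocker in wait_for.get(process, ()):
            blocker_state = state.get(blocker, UNVISITED)
            # Reaching a process that is still on the current path
            # means the chain of waits loops back on itself.
            if blocker_state == IN_PROGRESS:
                return True
            if blocker_state == UNVISITED and visit(blocker):
                return True
        state[process] = DONE
        return False

    return any(state.get(p, UNVISITED) == UNVISITED and visit(p)
               for p in wait_for)


if __name__ == "__main__":
    # P1 waits for P2, P2 waits for P3, and P3 waits for P1: circular wait.
    print(has_cycle({"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]}))
    # Here P3 waits for nobody, so it can finish and release its resources.
    print(has_cycle({"P1": ["P2"], "P2": ["P3"], "P3": []}))
```

With multiple instances per resource type, a cycle is necessary but no longer sufficient for deadlock, so this check alone would not be enough.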

- OS2: Process Synchronization-Unit 4: Critical Section Problem Unlimited

- Consider a system consisting of n processes {P0, P1, …, Pn-1}. Each process has a segment of code called the critical section, in which the process may be changing common variables, updating a table, writing a file, etc. The important feature of the system is that, when one process is executing in its critical section, no other process is allowed to execute in its critical section. The execution of critical sections by the processes is therefore mutually exclusive in time. The critical section problem is to design a protocol that the processes can use to cooperate. Each process must request permission to enter its critical section. The section of code implementing this request is the entry section. The critical section may be followed by an exit section. The remaining code is the remainder section.

      do {
          entry section
          critical section
          exit section
          remainder section
      } while(1);

  Figure 1.1 General structure of a typical process Pi

  A solution to the critical section problem must satisfy the following three requirements:

  1. Mutual Exclusion: If process Pi is executing in its critical section, then no other process can be executing in its critical section.
  2. Progress: If no process is executing in its critical section and some processes wish to enter their critical sections, then only those processes that are not executing in their remainder section can participate in the decision on which will enter its critical section next, and this selection cannot be postponed indefinitely.
  3. Bounded Waiting: There exists a bound on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

  Based on these three requirements, we will discuss some solutions to the critical section problem in this unit.

  2.0 Objectives

  At the end of this unit you should be able to:
  - Explain the critical section problem
  - State the different levels of critical section
  - Define semaphores
  - Define monitors
  - Distinguish between monitors and semaphores
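The entry, critical, exit, and remainder sections of Figure 1.1 can be mapped onto a small threaded sketch. It assumes Python's standard `threading.Lock` as the entry and exit protocol; the names `worker` and `run` and the iteration counts are hypothetical. A plain lock gives mutual exclusion, but it does not by itself promise bounded waiting.

```python
import threading

counter = 0              # shared variable updated inside the critical section
lock = threading.Lock()  # stands in for the entry/exit protocol


def worker(iterations):
    global counter
    for _ in range(iterations):
        lock.acquire()        # entry section: request permission to enter
        try:
            counter += 1      # critical section: update the shared variable
        finally:
            lock.release()    # exit section: let another thread enter
        # remainder section: work that does not touch shared data


def run(num_threads=4, iterations=10_000):
    """Start the workers, wait for them, and return the final counter."""
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(iterations,))
               for _ in range(num_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter


if __name__ == "__main__":
    print(run())  # every increment is preserved: 4 * 10_000
```

Since only one thread can hold the lock at a time, no two increments overlap and the final count equals the number of threads times the number of iterations.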

- OS2: Process Synchronization-Unit 3: Mutual Exclusion Unlimited

- In the previous units of this module you have been introduced to some pertinent concepts in process synchronization. This unit will expose you to another important concept in process synchronization, which is mutual exclusion. Mutual exclusion algorithms are often used in concurrent programming to avoid the simultaneous use of a common resource by pieces of computer code known as critical sections (these will be discussed in the next unit).

  2.0 Objectives

  At the end of this unit, you should be able to:
  - Describe what you understand by mutual exclusion
  - Describe ways to enforce mutual exclusion

- OS2: Process Synchronization-Unit 2: Synchronization Unlimited

- Synchronization refers to one of two distinct but related concepts: synchronization of processes, and synchronization of data. Process synchronization refers to the idea that multiple processes are to join up or handshake at a certain point, so as to reach an agreement or commit to a certain sequence of actions, while data synchronization refers to the idea of keeping multiple copies of a dataset in coherence with one another, or of maintaining data integrity. Process synchronization primitives are commonly used to implement data synchronization. In this unit you are going to be introduced to process synchronization.

  2.0 Objectives

  At the end of this unit, you should be able to:
  - Define process synchronization
  - Describe non-blocking synchronization
  - Explain the motivation for non-blocking synchronization
  - Describe various types of non-blocking synchronization algorithms
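The idea of several processes joining up at a certain point before continuing can be sketched with a barrier. This example uses threads and Python's standard `threading.Barrier` as a stand-in for processes; the function name `rendezvous` and the event labels are hypothetical. Note that a barrier is a blocking primitive, so it illustrates the handshake, not the non-blocking techniques listed in the objectives.

```python
import threading


def rendezvous(num_workers=3):
    """Run workers that all meet at a barrier; return the recorded events."""
    barrier = threading.Barrier(num_workers)
    events = []  # list.append is thread-safe in CPython

    def worker(name):
        events.append(("arrived", name))
        barrier.wait()  # block here until every worker has arrived
        events.append(("continued", name))

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return events


if __name__ == "__main__":
    for event in rendezvous():
        print(event)
```

No worker can record "continued" until all of them have recorded "arrived", so the arrivals always come first in the event list, whatever order the threads happen to run in.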

- OS2: Process Synchronization-Unit 1: Race Condition Unlimited

- A race condition or race hazard is a flaw in a system or process whereby the output of the process is unexpectedly and critically dependent on the sequence or timing of other events. The term originates with the idea of two signals racing each other to influence the output first. Race conditions arise in software when separate processes or threads of execution depend on some shared state.

  2.0 Objectives

  At the end of this unit you should be able to:
  - Define race condition
  - Describe some real-life examples of race conditions
  - Describe computer security in view of race conditions
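The dependence on timing described above can be shown without real threads by replaying two fixed schedules. This sketch assumes each worker performs `balance = balance + 1` as two separate steps, a read and then a write; the function name `run_schedule` and the worker labels are hypothetical.

```python
def run_schedule(schedule):
    """Replay the read and write steps of the workers in the given order.

    Each worker increments the shared balance in two steps: it reads the
    balance into a private register, then writes register + 1 back.
    """
    balance = 0
    registers = {}
    for worker, step in schedule:
        if step == "read":
            registers[worker] = balance
        elif step == "write":
            balance = registers[worker] + 1
    return balance


# Worker A finishes its update before worker B starts.
serial = [("A", "read"), ("A", "write"), ("B", "read"), ("B", "write")]

# Both workers read the old value before either one writes.
interleaved = [("A", "read"), ("B", "read"), ("A", "write"), ("B", "write")]

if __name__ == "__main__":
    print(run_schedule(serial))       # 2: both increments are kept
    print(run_schedule(interleaved))  # 1: one increment is lost
```

The same two increments give different results depending only on the order of the steps, which is exactly what makes the outcome depend on timing once shared state is involved.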
